Unlike our other senses, touch acts on our feelings as a personal sensation generated within ourselves. For example, when we see beautiful autumn leaves, we do not think of our impression that they are pretty as something occurring within ourselves. However, when we stroke a pet, we feel calmer and find even just looking at it soothing. This is because touch and vision stimulate each other, giving rise to feelings in our brain. Stimulating emotions in this way is one element of the sense of touch.

Special Feature 1 – The World of Touch: Touch stimulates emotions, influencing vision and hearing

composition by Rie Iizuka

illustration by Koji Kominato

Just looking at something is enough to make us conjure up impressions in our imagination, such as “fluffy” or “cold.” When we see the plump cheeks of a baby, we reflexively want to touch them. Our senses of vision and touch resonate with each other in this way to create reality for us.

First, let us look at the features of touch and vision.

The way we feel touch differs considerably from the way we experience our other senses.

The sense of touch develops in humans at an earlier stage than our other senses. A study conducted by a team including Professor Masako Myowa-Yamakoshi and Associate Professor Masahiko Kawai from Kyoto University and Japan Science and Technology Agency (JST) Research Fellow Minoru Shibata tested newborn infants by exposing them to a vibration stimulus, showing them something, and giving them sounds to listen to. The infants demonstrated a much wider range of brain activity in response to the tactile stimulus than to the other stimuli.

The visual cortex is believed to take up about one-third of the adult brain. Although the part of the brain processing touch is much smaller, the results of the aforementioned experiment show that the sense of touch is dominant in newborns. In adults, the left side of the brain reacts to stimulation of the right hand, while the right side of the brain reacts to stimulation of the left hand. However, in newborns, both sides of the brain demonstrate activity in response to stimulation of only one hand. Accordingly, we can see that tactile stimuli dominate sensory processing in the newborn period.

In contrast, a newborn has visual acuity of less than 20/200, with binocular vision developing after a few months. The reason why babies are surprised when we play peekaboo with them is that they cannot recognize that there is a face behind the hands hiding it, and a similar situation occurs with the sense of touch. If an adult holds both ends of a plastic bottle, they can tell from the tactile sensation that they are holding a single plastic bottle, but infants holding something in both hands find it hard to recognize that it is a single object.

Touch differs considerably from our other senses in terms of the way we feel it. In the case of vision, we receive information with receptors in the retina, but we do not regard ourselves as seeing with our retinas. With sound, too, we do not think in terms of hearing with our eardrums. Because these stimuli are external to us, we project what we have received onto the outside world. On the other hand, when it comes to the sense of touch, although the brain processes stimuli occurring on the skin, for some strange reason we regard these tactile stimuli as personal sensations occurring within us. In psychology, touch is called a near sense, while vision and hearing are referred to as far senses. There are major differences between touch, on the one hand, and vision and hearing, on the other, in terms of our consciousness of their spatial location.

In one of his books, the philosopher Markus Gabriel uses the term “make transparent.” The self that is supposed to be doing the feeling becomes transparent. That is to say, he points out that we lack consciousness of the act of feeling. He also says that this is particularly true of vision and hearing. I believe that, in the case of vision and hearing, this is because we sense depth in stereo (with both eyes or both ears), so the perception is externalized, resulting in a stronger tendency for the self doing the feeling to become transparent. In contrast, this process of becoming transparent is harder to achieve with touch. If we prick ourselves on a rose’s thorn, it is we who experience the pain; we do not think in terms of the pain existing in the thorn. However, if the thorn is green, it is hard for us to think, “I am sensing that it is green.”

The relationship in which touch and vision stimulate each other

This relationship in which touch and vision stimulate each other has been treated as almost self-evident in the art world.

The British painter Francis Bacon created many works featuring distorted faces. These paintings evoke a tactile sensation in the viewer, as though one had squashed the faces in one’s hand. In the field of scientific research into sensation, there is a tendency to compartmentalize the senses into vision, touch, smell, taste, and so on, but in the arts, many have long argued that it is important for viewed works to convey tactile impressions. Perhaps they could see that touch complements vision and leads to the creation of a variety of realities for us. In the words of Gabriel, reality is when that process of becoming transparent does not occur; in other words, reality becomes stronger when the self actually doing the feeling manifests itself. That is precisely why the sense of touch, which does not readily become transparent, is more prone to making us feel reality. By distorting his paintings, Bacon ingeniously evoked tactile sensation, preventing the painting (vision) from becoming transparent and imbuing it with reality.

There are many experiments that demonstrate scientifically that touch, vision, and hearing influence one another.

For example, there is the attention experiment conducted by a team led by Charles Spence at the University of Oxford in the UK (Table). This experiment looks at how long it takes for a person whose attention is biased toward one sense to become conscious of another sensation and react to it. The test subject holds a smartphone-like device in both hands and pushes a pedal when it vibrates, makes a noise, or lights up. In the first part of the test, each stimulus is administered the same number of times in order: tactile three times, visual three times, and auditory three times. Then the stimulus is administered with a bias toward one particular sense, for example, tactile eight times, visual once, and auditory not at all. When this takes place, subjects who have received stimuli biased toward visual or auditory stimuli react to other stimuli without delay, but those whose stimuli were biased toward touch react more slowly to visual and auditory stimuli.

| Target Modality | Measure | Expect Auditory | Expect Visual | Expect Tactile | Divided Attention*1 |
|---|---|---|---|---|---|
| Auditory | Reaction Time (ms) | 487 (18) | 524 (18) | 556 (22) | 497 (19) |
| | Attention effect*2 | 10 | -27 | -59 | |
| Visual | Reaction Time (ms) | 526 (18) | 488 (15) | 573 (25) | 497 (19) |
| | Attention effect | -29 | 10 | -76 | |
| Tactile | Reaction Time (ms) | 556 (20) | 549 (22) | 513 (21) | 507 (22) |
| | Attention effect | -48 | -42 | -6 | |

Table. Auditory, visual, and tactile reactions. When paying equal attention to vision, hearing, and touch, a person can react to visual (light), auditory (sound), and tactile (vibration) stimuli in around 500 milliseconds (ms); this is the divided attention condition. However, when a person is expecting a tactile stimulus but receives an auditory or visual stimulus, their reaction time is 556 ms or 573 ms, respectively. In the case of the visual stimulus, this represents a delay from the divided attention condition (497 ms) of 573 – 497 = 76 ms. In the world of sensory reactions, this is a big difference.

(Excerpt from an experiment conducted by a team led by Charles Spence at the University of Oxford)

- *1 Divided attention: Results when the auditory (sound), visual (light), and tactile (vibration) stimuli were presented the same number of times.

- *2 Attention effect: Attention effect size showing the difference from the divided attention conditions. + indicates that the reaction was accelerated, – that it was delayed.
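As a rough check on the table, each attention effect can be recomputed as the divided-attention reaction time minus the reaction time under a given expectation. The following minimal Python sketch assumes the mean reaction times as printed in the table (the parenthesized values are left out) and uses illustrative names (`REACTION_MS`, `attention_effects`) that do not come from the original study. Because the printed means are rounded, a recomputed value may differ from the listed effect by 1 ms.

```python
# Mean reaction times (ms) copied from the table above.
# Assumption: the parenthesized values in the table are omitted here.
REACTION_MS = {
    "auditory": {"expect_auditory": 487, "expect_visual": 524,
                 "expect_tactile": 556, "divided": 497},
    "visual": {"expect_auditory": 526, "expect_visual": 488,
               "expect_tactile": 573, "divided": 497},
    "tactile": {"expect_auditory": 556, "expect_visual": 549,
                "expect_tactile": 513, "divided": 507},
}


def attention_effects(row):
    """Return divided-attention RT minus RT for each expectation condition.

    Positive values mean a faster reaction than under divided attention,
    negative values mean a delayed reaction.
    """
    baseline = row["divided"]
    return {cond: baseline - rt for cond, rt in row.items() if cond != "divided"}


for target, row in REACTION_MS.items():
    print(target, attention_effects(row))

# Visual target while expecting a tactile stimulus: 497 - 573 = -76 ms
```

For auditory and visual targets, the largest delay appears when the subject was expecting a tactile stimulus.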

In other words, once our attention is focused on touch, it becomes harder for us to switch to other stimuli. The difference between near and far senses is probably one of the ways in which this can be interpreted.

However, touch is a sense whose existence we can forget if we stop paying attention. Whereas we are not constantly conscious of the parts of our body touching a chair when we are sitting down, when it comes to vision, we do not stop seeing the desk just because we are looking at our computer monitor.

Vision, hearing, and touch sometimes act upon each other to create perceptions that differ from reality.

In an experiment called the double flash illusion, a white light flashes once while a beep or other noise sounds twice at the same time. It then appears as though the light flashed twice, even though it only flashed once. The same thing happens with touch: when a light flashes once while a finger is stimulated twice at the same time, it appears as though the light flashed twice. This misperception is quite distinct, and most people state firmly that the light flashed twice. A sound-based demonstration of this phenomenon can be found at the NTT Communication Science Laboratories website (http://www.kecl.ntt.co.jp/IllusionForum/a/doubleFlashIllusion/ja/), along with an explanation.

Using vision and touch to perceive our own bodies

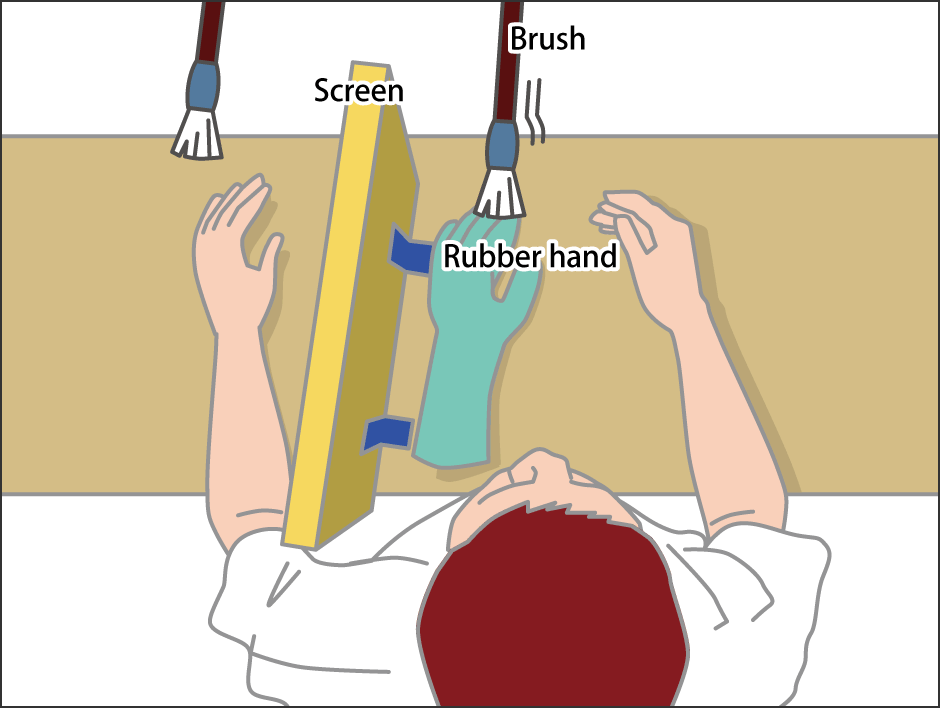

There is an interesting phenomenon called the rubber hand illusion, which relates to the misperception of touch (Figure 1).

Figure 1. The rubber hand illusion. The longer the rubber hand is in the subject’s field of view, the more it comes to feel like the subject’s own hand.

One of the subject’s hands is placed on the far side of a screen so that the subject cannot see it. A rubber hand is then placed on the near side of the screen, where the subject can see it. After the subject has maintained this position for a while, the rubber hand is touched with a brush and the subject feels as though it is their own hand being touched. In addition, when the subject is then asked to point at their own hand, those subjects with a greater sensation of the rubber hand being their own tend to point to a spot closer to the rubber hand than to their actual hand. This experiment attracted a great deal of interest and subsequently even began to be used in the treatment of obsessive-compulsive disorder.

What we can see from this is that we use vision and touch to perceive our own bodies. We can tell our own posture and joint positions even with our eyes closed; this is called proprioception. If the subject were aware of their body in the usual way, this illusion should not occur, even with the rubber hand placed there. However, it seems likely that proprioception actually diminishes while one is maintaining the same posture.

We do not usually look at our hands from time to time to check that they are there, but we are aware of our hands when we see them. That is probably why, when the rubber hand is within our range of vision, proprioception wanes and we attach greater importance to what we can actually see.

Let us look at the way in which these senses work together, from the perspective of brain activity.

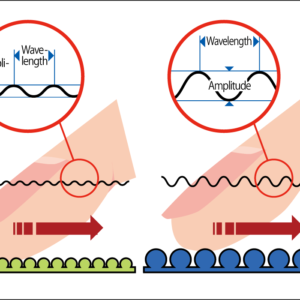

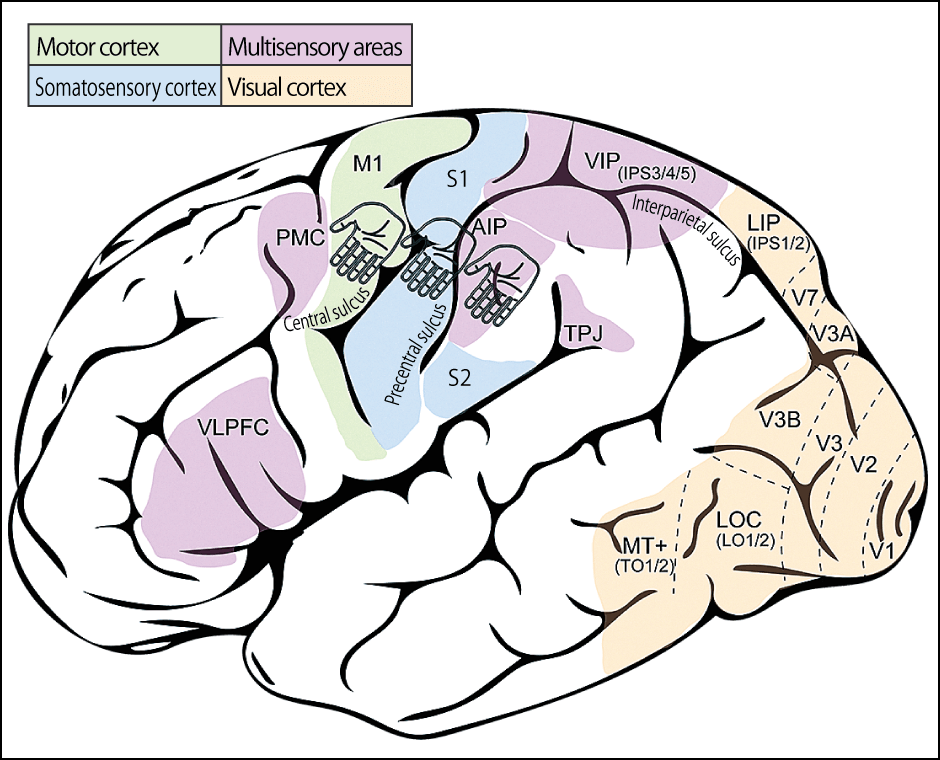

We broadly understand which parts of the brain become active in response to visual or tactile stimuli, but research has found that there is a phenomenon in which the somatosensory cortex becomes active in response to visual stimuli and the visual cortex is activated by tactile stimuli (Figure 2).

Figure 2. Regions of brain activity. Touch is projected via nerves distributed throughout the body and conveyed to S1. S2 is said to perceive rough and smooth textures. When touch stimuli are added, activity is seen not only in these regions, but also in the somatosensory cortex and nearby association area. The association area reacts to various stimuli. It is the orbitofrontal cortex that feels pleasant sensations when we are touched. Visual and tactile information are said to be integrated in the multisensory area between the orbitofrontal cortex and the top of the head.

It is thought that experience is a factor contributing to the occurrence of this phenomenon. After a monkey has been given the experience of touching fur, metal, glass, ceramics, leather, and various other items, there is a change in activity in its visual cortex when it then looks at those items. Touching something can cause changes in the visual cortex.

After analyzing the brain activity of monkeys in this experiment based on looking and touching, a team led by Assistant Professor Naokazu Goda from the National Institute for Physiological Sciences was able to determine whether a monkey was looking at something ceramic or something wooden, just by observing the activity of its visual cortex at the time. As the researchers were unable to tell what the monkeys were looking at from their brain activity before giving them the experience of touching the items, we can see that the experience of touching the items stimulated not only the somatosensory cortex, but also the visual cortex.

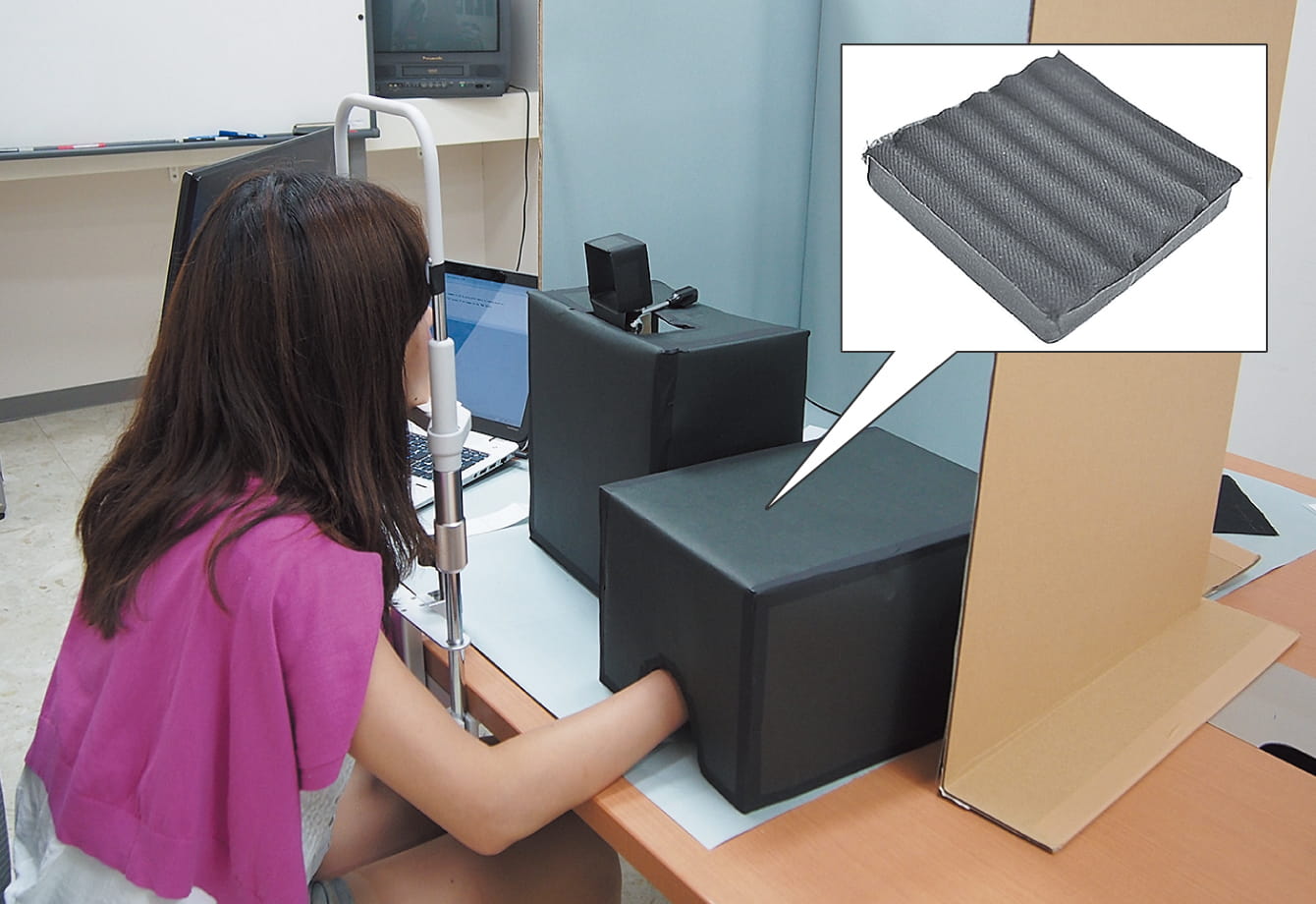

I conducted a slightly more complex experiment with humans in partnership with the Kyoto Institute of Technology (Figure 3). We prepared a number of different types of black fabric, of the kind used to make suits, and then had the subjects touch them while blindfolded. We then had them look at the fabrics that they had previously only touched and had them say whether or not those fabrics were the ones they had touched before. We also had the subjects complete a task in which they looked at the fabrics without touching them and tested them in a similar way on whether or not they had seen them before. The percentage of correct answers was much higher in the first test than in the second. Although the subjects had only touched the fabric without having seen it, the tactile information supplemented their visual judgments. I call the phenomenon whereby touch and vision interact with each other “visuotactile perception.”

Figure 3. Visuotactile perception experiment. People develop the ability to determine whether a material that they are seeing for the first time, after having only touched it before, actually is the material that they touched.

In addition, while not relating to touch and vision, intersensory coordination is a known phenomenon in the field of psychology, suggesting that hearing and vision influence each other.

The five senses work together and are integrated by the brain

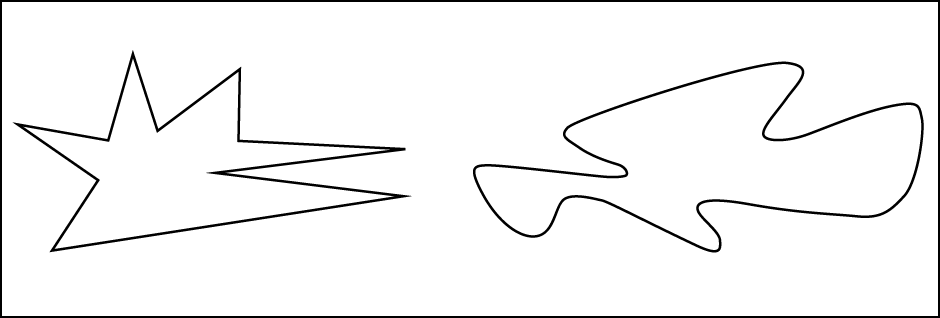

Take a look at Figure 4. If these two shapes had names, which do you think would be called Bouba and which Kiki?

Figure 4. Which is which? This experiment in assigning the names Kiki and Bouba is from a study by Ramachandran and Hubbard at the University of California in the U.S.A. Even if these particular drawings are not used, there is a high probability of gaining similar results when an angular shape is placed next to a curved one.

A study found that 90% of people replied that the one on the left was Kiki and the one on the right, Bouba. In fact, the words Kiki and Bouba have no relationship whatsoever to the shapes. The fact that our senses choose particular combinations nonetheless would seem to be a very strange phenomenon. It seems that our senses work together in this way to assist us in understanding our circumstances.

Research into sensory deprivation flourished in the 1950s and 1960s. In an experiment conducted by Donald O. Hebb of McGill University in Canada, the test subjects were laid flat on a bed with their hands and eyes covered, and noise with uniform intensity across frequencies (white noise) was played, in order to observe what effects minimizing all external stimuli had on humans. The results showed that subjects experienced hallucinations, became more suggestible, and suffered cognitive decline. Extreme reductions in tactile, visual, and auditory stimuli, among others, cause anxiety by making a person’s sense of their own existence ambiguous.

As we have seen above, vision, touch, and the rest of the five senses work together to communicate information, which is then integrated in the brain. In particular, touch has elements that stimulate emotions and it appears to influence vision and hearing in unexpected ways. With tactile stimuli reduced at present for various reasons, it might be wise for us to gain a renewed awareness of touch, even if only to reaffirm our own existence.