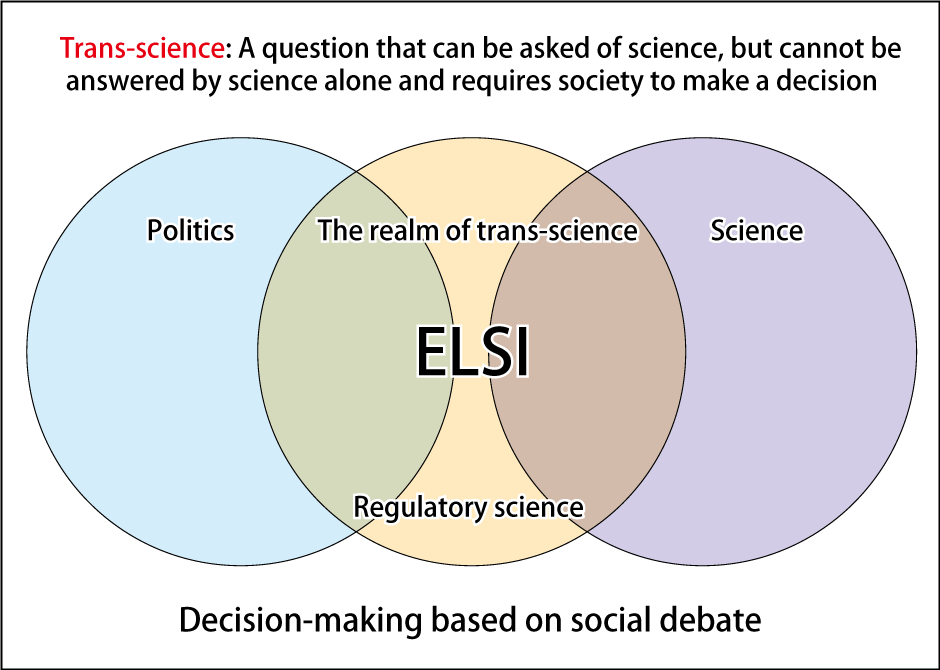

Verifying safety scientifically would take considerable time, but even without waiting for a conclusion to this question, modern society has benefited immensely through the assimilation of science. That is why we cannot look only to scientists for the correct solution when something happens. More specifically, it is society that today has the ultimate authority to make decisions and therefore, society as a whole needs to fully discuss the uses of science, rather than leaving it up to “experts.”

Special Feature 1 – Is Science Communication Good Enough?

Risk communication is a dialogue between scientists and the public

composition by Rie Iizuka

The accident at the Fukushima Daiichi Nuclear Power Plant caused by the Great East Japan Earthquake will go down in the annals of human history. Bewildered, we sought the views of experts, but even they could not agree about a situation of which they had no previous experience. While some said that experts should speak with one voice, others were of the opinion that it did not make sense for experts to be unable to speak freely and we ended up in a chaotic situation in which everyone voiced differing views.

When the COVID-19 pandemic broke out, my first impression was that exactly the same thing was occurring. However, one difference is that some members of the government’s expert panel have spoken out in the form of COVID-PAGE, the Public Advisory Group of Experts. It may well have been an expression of their desire to appeal to the public based on their intellectual responsibility as experts participating in a government expert panel, but without being influenced by the government’s wishes.

Can scientists be held accountable for outcomes?

Bearing in mind the experiences of the Fukushima nuclear power plant accident, perhaps the COVID-PAGE members thought it would be hard for experts to convincingly educate the public about the current situation if it came in the form of an immutable dictum.

When a problem occurs in society, the best model is regarded as being for scientists to present several options and for policymakers to select one of those options based on a comprehensive perspective. It is true that there are some situations where science can obtain precise, objective figures and present error-free findings based on them.

On the other hand, many problems cannot be resolved so simply. Responses to both the nuclear power plant accident in Fukushima and the COVID-19 pandemic make us painfully aware that we no longer live in an age in which scientists can provide us with ready answers. So can scientists be held accountable for the ultimate outcomes even if we seek advice from them? I call this kind of issue a trans-scientific question.

The term “trans-science” was coined in the 1970s by the American nuclear physicist Alvin M. Weinberg (Figure 1). He said that while all experts would agree that a catastrophe would occur if every one of the multiple safeguards in a nuclear reactor failed at once, the question of whether all those multiple safeguards could fail at once and whether measures to counter that situation should be taken is trans-scientific, as the experts disagree and the question transcends science. This means that while it is a question that can be asked of science, it is not one that science alone can answer.

Figure 1. What is trans-science? Trans-scientific issues such as genetic modification, nuclear power generation, nanotechnology, and ICT will permeate society increasingly deeply in the future. A useful concept in resolving these issues is ELSI (ethical, legal, and social implications). While scientific and technological advances such as genome editing have brought about explosive growth in the range of things we can do, we are disregarding considerations of whether we should, must, or must not do them. Consideration of this concept is imperative in seeking to use science and technology in society.

(Source: Kobayashi T. 2007)

Weinberg also said that such questions would likely proliferate in the future. As it happens, because Weinberg was a nuclear physicist and nuclear engineer, he often highlighted accidents relating to radiation and nuclear power plants. I was lost for words when I realized that the 2011 Fukushima nuclear power plant accident had followed exactly the same pattern that he had described back in the 1970s.

The structure of science mirrors the structure of society

Weinberg was a seasoned nuclear physicist. In his article on trans-science, he made some deeply interesting observations. According to Weinberg, U.S. nuclear power plants were “loaded down” with more safety systems than nuclear power plants in the Soviet Union. He said that, from an expert’s perspective, such extensive systems were unlikely to be necessary. Looking at the origin of this difference between the Soviet Union and the U.S., Weinberg noted that the Soviet Union had no social mechanism for including the views of anyone other than engineers or other experts during such systems’ design and implementation phases. However, the U.S. democracy has mechanisms for incorporating the views of various stakeholders in the social implementation process. In other words, differences in social structures are reflected in the extent of the safety systems. “The added emphasis on safety in the American systems is an advantage…and in so far as this can be attributed to public participation in the debate over reactor safety, I would say such participation has been advantageous,” Weinberg said.

A process such as the American one might bring forth views not corroborated by expert knowledge. Should that occur, scientific experts should speak out as accurately as possible based on their own expertise. However, I believe that there is a high risk of developing tunnel vision when experts have the final say on decisions. So, I can say that the structure of science and that of society are isomorphic.

In the 1990s, when debate over genetically modified crops was raging in the U.K., virtually the entire public became hostile toward science. In 2000 or thereabouts, after the uproar had subsided, Sir Robert May, Chief Scientific Adviser to the British Government, said that the concerns being expressed “are not about safety as such, but about much larger questions of what kind of a world we want to live in.” It is up to society to decide how we use science and technology.

In the field of science communication, the approach of educating the public based on the belief that discord arises from a deficit in their understanding of the correct scientific knowledge is called the “deficit model.” Today, it is regarded as a mistake to which scientists are all too prone. However, quite a few researchers still struggle to extricate themselves from this model.

There is some overlap in the history of science communication and risk communication; the latter concept began in the 1970s (Figure 2). There have been a few phases through to the present day. Phase I focuses on communicating probability and other figures relating to risk. In Phase II, importance is attached to communicating not only the figures, but also their significance. This includes telling people, “This is the probability of being involved in a traffic accident in the course of a year, so the risk is low compared with that,” for example, or trying to convince “non-experts” that the advantages outweigh the risks, with statements such as “Yes, there is a risk of traffic accidents from using cars, but your freedom of movement increases dramatically.” However, neither approach secures public understanding.

Figure 2. Reflections on risk communication. While the rest of the world has gone through this process, Japan is, unfortunately, still stuck in Phase II.

(Source: Kobayashi T. 2007)

Treat the other party as a partner

So the next approach was to explain things carefully in an orderly sequence, like some kind of commercial, but this actually aroused suspicions that the experts were trying to fool people somehow.

At the end of the 20th century, we entered the phase of treating the other party as a partner. So what actually constitutes a partner? I often quote the excellent explanation given by Professor Kazuya Nakayachi of Doshisha University.

Let us assume I am going to go and buy a pair of trousers. But my wife does not trust my instincts when it comes to shopping, so she tells me she is going to come with me. When I ask my wife what she thinks about a pair, she scrutinizes them carefully, looking at the material and the price, and then tells me that I should look for something a bit different. This is the basic template for the kind of communication necessary when relationships of trust have broken down.

In other words, scientists present a first draft and ask the public what they think, but the public will not necessarily accept that proposal without objection and might respond with a request to think of something else. In that situation, experts must be prepared to respond to their wishes in good faith. That is what treating the other party as a partner means.

Scientists, as experts, must also make the effort to be candid and admit what they do not know. Being an expert means being able to explain your field of specialism to non-experts accurately and in a manner easily understood by a lay audience, being able to explain the significance of your specialism to society, and being able to describe the boundaries and limitations of your specialism. I believe that being able to do these three things is the precondition for being considered an expert.

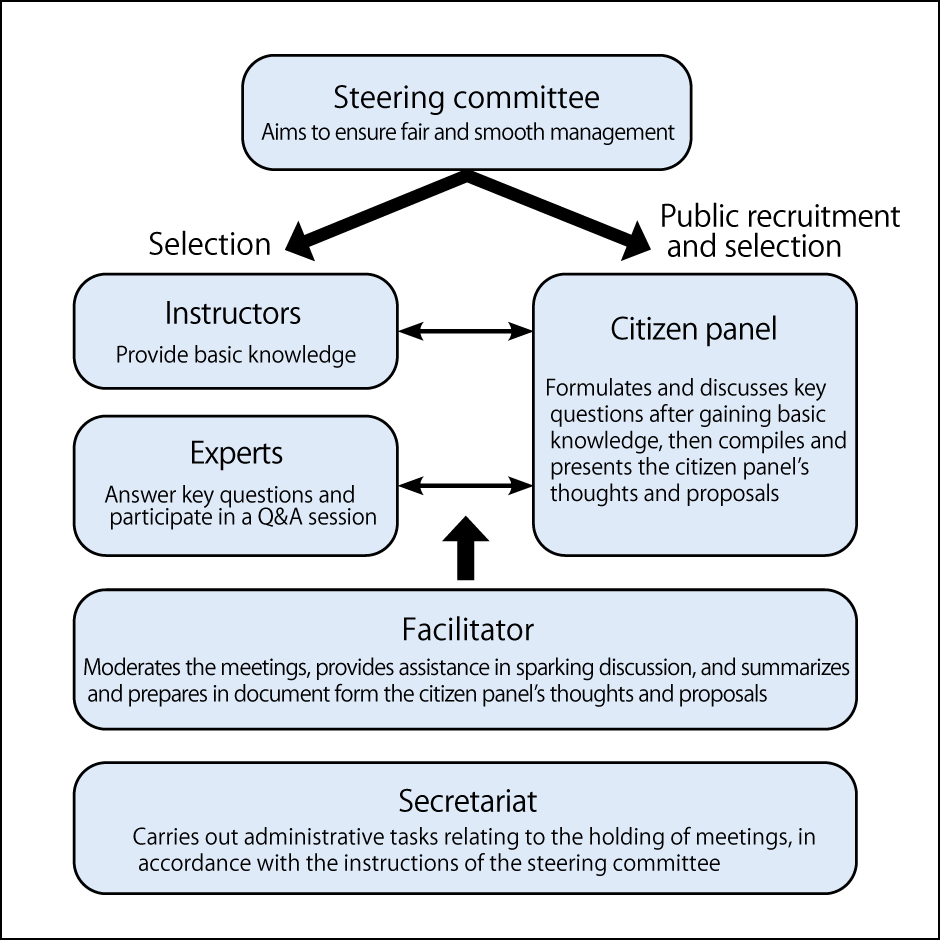

In 1994, I had the opportunity to view preparations for a consensus conference in the U.K. and found it highly interesting. A consensus conference is one technique for undertaking a technology assessment with public participation. It is a forum at which the public and experts spend time discussing a scientific issue that is socially controversial, such as genetic modification technology (Figure 3).

Figure 3. How consensus conferences work. A meeting of the Consensus Conference on Genetically Modified Crops held in 2000. Concerns about whether the “non-experts” would be interested in the specialist topics proved unfounded and the citizen panelists were proactive in speaking up, with some stating in their feedback that there was not enough time to debate the issues.

The organizer of the British conference was Professor John Durant, the first editor of the academic journal Public Understanding of Science. He described the consensus conference as a new social contract for science. I think what he meant was that under the “old contract,” scientific and technological advances would be implemented if experts thought they were right, and members of the public were regarded only as consumers. However, things were going to be different in the society of the future.

After returning home, I held a number of consensus conferences, which attracted some degree of interest. A number of countries subsequently rolled out technology assessment techniques that included consensus conferences. In the course of this process, Japan became one of the world’s foremost proponents of the science café approach, where the public can participate in lectures on the topic. However, in Japan, such initiatives still tend to be regarded as forums at which scientists teach the general public about scientific achievements, with little perception of them as a model for dialogue between scientists and the public, as far as I can see. The root of this lies in society’s misconception of science.

The possibility of science making the wrong decision

At consensus conferences, several experts freely give their opinions on the same theme and sometimes their views are diametrically opposed. Members of the public at Japanese conferences were astonished to see such a variation in opinions between one expert and another, but I myself was actually surprised by this feedback. The public tends to think that science has clear-cut answers. However, if there were an answer to every single scientific question, there would be no scope for scientists to conduct research. I want people to know that seeking black and white answers from science will just lead to increasingly fruitless discussion.

Science is undoubtedly a very powerful intellectual tool, but it takes a correspondingly long time to make a stable contribution to society, whereas the time frame within which society has to make decisions is much shorter. As such, there is always a possibility of science making the wrong decision.

Scientists should say what they do not know and inform the public as accurately as possible, based on their responsibility as experts. Of course, even experts make mistakes. When this happens, the public should not condemn all scientists as incompetent because of one mistake. Society needs to accept this aspect of science as well.

So what should we do when undertaking the social implementation of science and technology? I believe this is a difficult question, but to describe it in terms of the theory of risk, nothing is ever free from risk, so the probability of failure is never zero. Accordingly, why not think about it conversely as a question of what the acceptable level of failure is?

In the West, this has been referred to as the “least regret policy.” To put it another way, if we have done all the things we should have, but have failed nonetheless, then it cannot be helped and failure is therefore acceptable. However, if we are unknowingly saddled with risk and suffer harm because of a failure to do everything that could have been done, then it is perfectly reasonable for people to feel angry. As no solution can guarantee absolute peace of mind, we need to engage in full consultation, based on a best efforts approach.