Scientists have discovered that our five senses (sight, hearing, taste, touch, and smell) do not operate independently; instead, they affect one another to create a single, unified sensation. Research now under way uses virtual reality (VR) technology to shed light on this “cross-modal perception” and to put it to practical use. According to the researchers, making the senses intersect can create environments in which people demonstrate their abilities more readily, and it can also have positive psychological effects.

Special Feature 1 – “Human Augmentation” Technology: Using VR to influence the senses, creating an environment conducive to demonstrating abilities

composition by Takeaki Kikuchi

The five senses were previously considered to be independent of one another. For instance, because sight and hearing are processed in different parts of the brain, it was thought that what we hear had no bearing on how we process what we see. In reality, however, there is no question that the five senses affect each other. When we eat a meal, for example, our perception of its flavor is formed not solely by the taste detected by our tongue but by the brain integrating information from all five senses: the food’s arrangement on the plate and other aspects of its appearance, its aroma, its texture in the mouth, and so on. In short, we now know that sensations experienced at the same time influence one another.

The senses adjust to each other as they process information

Ventriloquism offers an easily understood example. The ventriloquist’s dummy opens and closes its mouth, and a voice emerges while the mouth is moving. Although the voice actually comes from the human performer, the visual cue of the moving mouth tricks us into feeling that the voice is coming from the dummy. Even when sight and hearing are slightly out of step, the brain skillfully combines them into a single sensory experience: rather than registering that the voice comes from the performer, it adjusts our perception so that the voice seems to come from the dummy. This phenomenon, in which the senses adjust to each other as they process information, is called cross-modal perception. My specialist field is virtual reality (VR), and I am engaged in ongoing research that uses cross-modal perception to elicit a variety of sensations.

Probably the most readily understandable part of our research is altering taste by making the senses intersect.

In the case of taste, a number of techniques are already employed in the food industry. Take the syrups used to flavor shaved ice. They come in an assortment of flavors, including strawberry and melon, yet the syrups themselves taste essentially the same; what differs is their coloring and fragrance. These colorings and fragrances shape our perception of taste, so we sense one syrup as strawberry, another as melon, and so on. Elderly people’s ability to taste is also said to decline, but apparently it is their sense of smell, rather than their sense of taste, that wanes. Accordingly, giving dishes fairly strong aromas makes it easier for elderly people to taste their food, a strategy that is already in practical use.

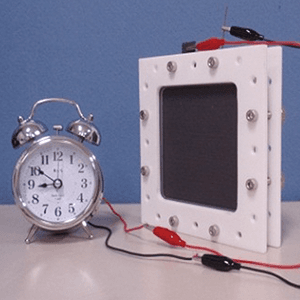

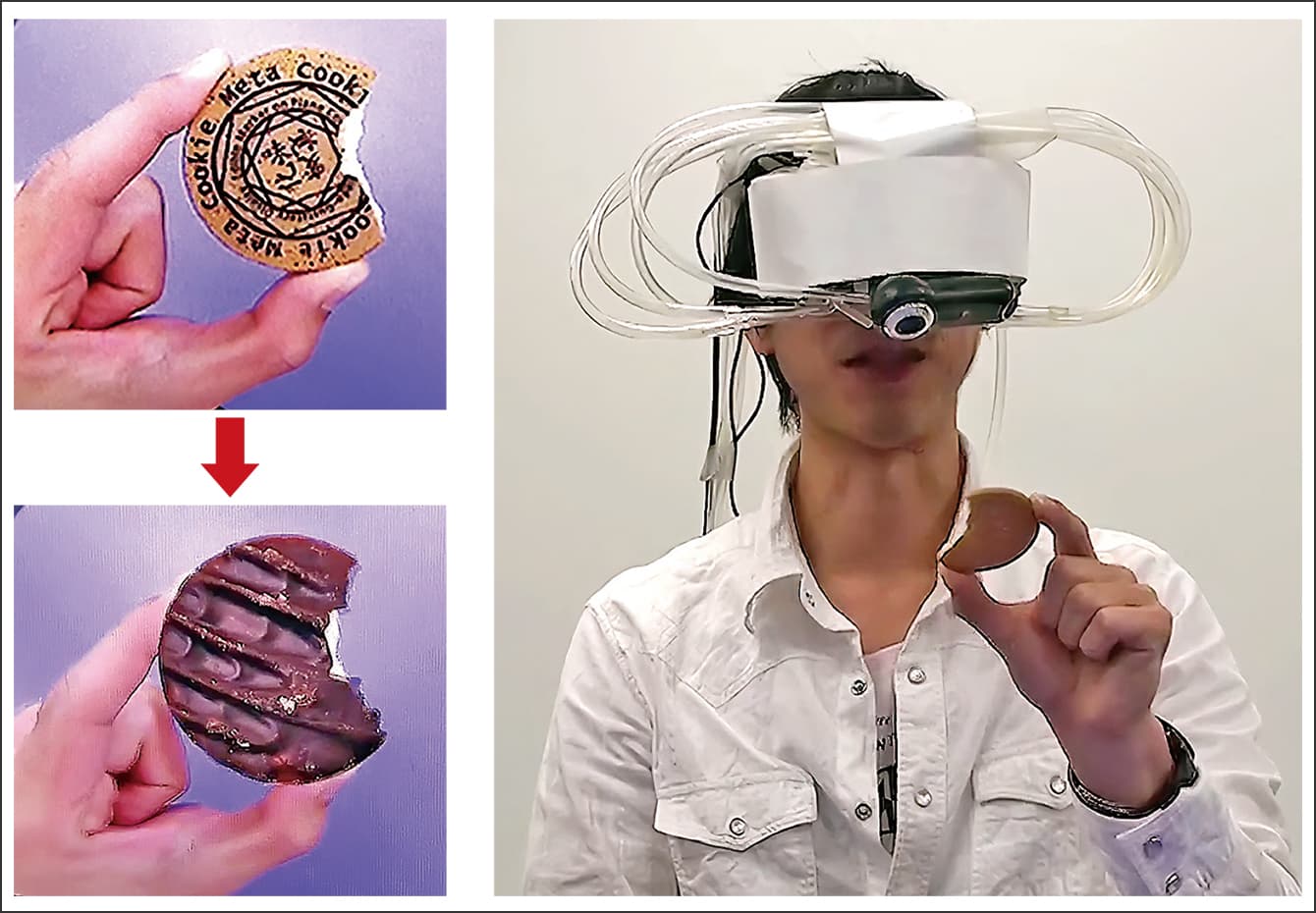

We are using VR to study this kind of cross-modal perception of flavor. The first system I created was Meta Cookie. When a person wearing a head-mounted display (HMD) eats a cookie with this system, they perceive it as chocolate-flavored, even though it is actually a plain cookie (Figure 1). The cookie is imprinted with a pattern that a computer can recognize, and when viewed through the HMD it looks just like a chocolate cookie. At the same time, the system releases a chocolate scent. Through this combination of sight and smell, the person perceives the plain cookie as chocolate-flavored. In our survey, at least 80% of those who ate the cookie said it tasted of chocolate. In the same way, we can give it a strawberry or almond flavor, so people can enjoy a variety of flavors without tiring of what is actually a single type of plain cookie.

Figure 1. Changing flavors with Meta Cookie. When viewed through the HMD, the plain cookie in the upper left image looks like a chocolate cookie, as seen in the lower left image. A chocolate smell is released when the subject eats the cookie, causing them to perceive it as chocolate-flavored.

We can also make a flavor milder or more intense. Because we can give even something that is not very sweet an intensely sweet flavor, we can reduce its sugar content and thereby lower its calorie content. Likewise, by making people perceive food as saltier than it is, we can help them eat low-salt meals.

Perception of satiety is influenced by factors including sight

By using VR to modify what we see, we can also alter feelings of satiety. Satiety normally comes from changes in blood glucose level and the sensation of a full stomach. However, the sensations from our internal organs are quite ambiguous. For example, if someone asks “Are you hungry?”, you might suddenly become aware of an empty stomach, even though you had not felt hungry until that point. Or, if the person you are dining with eats a lot, you might eat more than usual, as though keeping up with them.

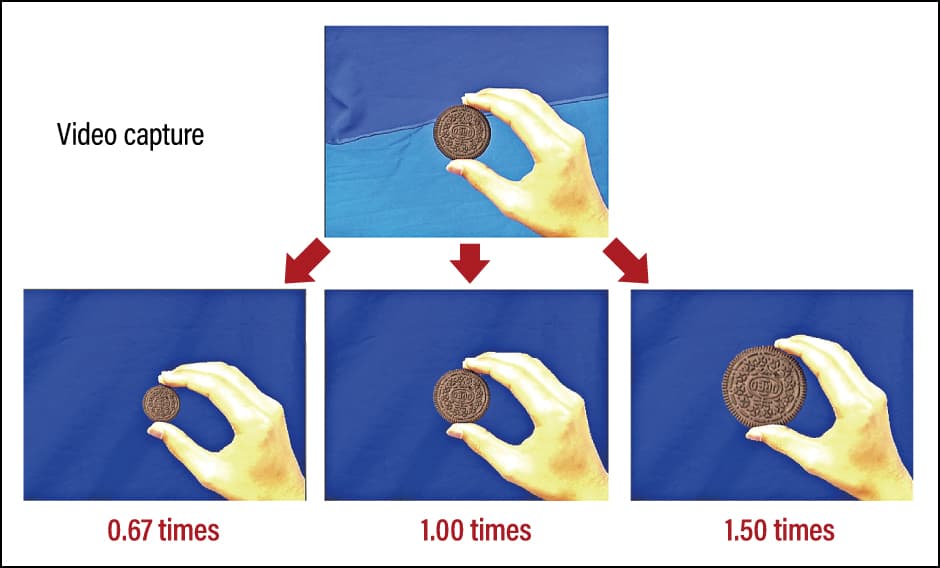

We conducted an experiment in which we used VR to alter the apparent size of cookies, to see whether this would influence the sensations coming from people’s stomachs (Figure 2). Although all the cookies were actually the same size, the quantity that people ate fell by approximately 9.3% on average when the cookies were made to look larger than they were, and rose by approximately 13.8% on average when they were made to look smaller. This showed that the perception of satiety is determined not only by the actual amount eaten and the blood glucose level but also by other factors, including sight. We named this effect “augmented satiety.”

Figure 2. The relationship between perceived size and satiety. The size of the cookie looks different when viewed through the HMD, which brings about marked changes in the perception of satiety. One person felt full after eating 13 cookies when the small image was used, 11 when the normal-sized image was used, and 7 when the large image was used.
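The single participant in the caption can be set against the group averages quoted above with a little arithmetic. The Python sketch below does that calculation. It assumes, since the text does not say so explicitly, that both the caption’s counts and the reported averages are measured against the normal-size condition; the names `counts`, `reported_avg_change`, and `relative_change` are introduced here for illustration.

```python
# Cookies eaten by the single participant described in the Figure 2 caption.
counts = {"small": 13, "normal": 11, "large": 7}

# Group-average changes quoted in the text.
# Assumption: both are relative to the normal-size condition.
reported_avg_change = {"small": 0.138, "large": -0.093}


def relative_change(condition: str, baseline: str = "normal") -> float:
    """Fractional change in cookies eaten versus the baseline condition."""
    return (counts[condition] - counts[baseline]) / counts[baseline]


for cond in ("small", "large"):
    individual = relative_change(cond)
    print(f"{cond:>5} image: this participant {individual:+.1%}, "
          f"group average {reported_avg_change[cond]:+.1%}")
```

For this participant the change works out to about +18% with the small image and about -36% with the large one, a stronger response than the group averages; one person’s counts say nothing about how much subjects varied.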

Most people would probably feel uncomfortable wearing an HMD during a meal. People tend to picture VR as looking at things through an HMD, but researchers regard anything that alters perceived reality as VR, so there is no particular need to wear one. For example, projection mapping can be used to change the apparent size of a plate. Food served on a plate that looks large seems small in quantity, while food on a plate that looks small seems larger. This is an application of an optical illusion called the Delboeuf illusion. The food looks smallest when it fits within a circle one-third the diameter of the plate, and largest when it fits within a circle two-thirds the plate’s diameter. By projecting an image of a plate to change its perceived size, we can create a feeling of satiety with a smaller quantity of food. This technique could perhaps be used in meals for people who are dieting or who have conditions requiring dietary restrictions. The remaining question, and a challenge for us to address going forward, is how to introduce such technology into real-life settings.
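The one-third and two-thirds figures translate directly into how large a plate to project for a given portion. The Python sketch below works this out under stated assumptions: a roughly round portion centered on a round plate, and a hypothetical 12 cm portion. The function names are introduced here and do not come from the research.

```python
from fractions import Fraction

# Food-to-plate diameter ratios mentioned in the text.
LOOKS_SMALLEST = Fraction(1, 3)  # portion appears smallest
LOOKS_LARGEST = Fraction(2, 3)   # portion appears largest


def projected_plate_diameter(food_diameter_cm: float, ratio: Fraction) -> float:
    """Diameter of the projected plate so the food spans `ratio` of it.

    Assumes a roughly round portion centered on a round plate.
    """
    return food_diameter_cm * ratio.denominator / ratio.numerator


def plate_area_covered(ratio: Fraction) -> Fraction:
    """Share of the plate's area covered by a round portion at this diameter ratio."""
    return ratio ** 2


portion = 12  # hypothetical portion diameter in cm
for label, ratio in (("looks largest", LOOKS_LARGEST), ("looks smallest", LOOKS_SMALLEST)):
    print(f"{label}: project a {projected_plate_diameter(portion, ratio):.0f} cm plate "
          f"(food covers {float(plate_area_covered(ratio)):.0%} of its area)")
```

Note that the ratios refer to diameter, not area: at two-thirds of the diameter the food covers only 4/9 (about 44%) of the plate’s area, and at one-third it covers 1/9 (about 11%).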

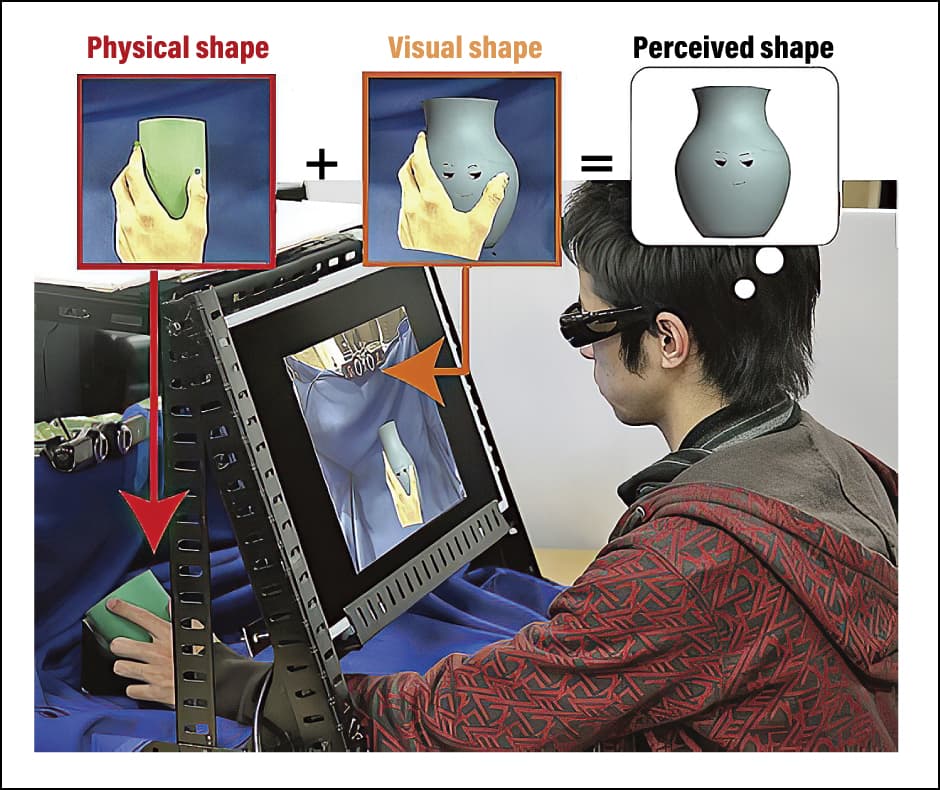

We have also conducted an experiment that uses visual information to influence the sense of touch (Figure 3). A cylindrical object is placed behind a monitor, out of the subject’s sight, and the subject touches it with their fingers. Meanwhile, the monitor shows images of fingers touching objects with non-cylindrical shapes, such as a jar-shaped object, one with a depression in the middle, or one whose sides are cut diagonally at the top. The subject then feels as though they are touching an object with the shape shown on the screen, even though they are actually touching a cylinder. Applying these results, we conducted a study with the Tokyo National Museum in which we displayed on a monitor images of cultural properties that cannot actually be touched, enabling people to experience touching them virtually.

Figure 3. The sense of touch is tricked by visual information. Although the subject is touching a cylindrical object, computer graphics on the monitor show them touching an object with a different shape. In the experiment, 85–90% of people said the object had a depression in the middle or bulged outward.

A virtual hand synchronized with a real hand

By virtually simulating what it feels like to touch something, even if the object itself cannot be touched, it may become possible to convey tactile sensations in online shopping. For instance, the store would have the actual blanket, while the consumer would have a simple piece of cloth. Data on how the blanket’s shape changes under different degrees of pressure would be recorded in advance, so that when the consumer pressed firmly on the cloth in front of them, they would see an image of the store’s blanket yielding to their touch. This would give the consumer a rough sense of how soft the blanket is.
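The blanket scenario amounts to a lookup: the force measured on the consumer’s cloth is mapped to the deformation recorded on the real blanket beforehand. The Python sketch below assumes a hypothetical calibration table of force versus surface indentation (the numbers are placeholders, not measurements) and uses simple linear interpolation between recorded points. The text does not specify how the data would be stored or rendered, so `CALIBRATION` and `displayed_indentation_mm` are illustrative names only.

```python
from bisect import bisect_left

# Hypothetical calibration recorded in advance on the real blanket:
# (pressing force in newtons, surface indentation in mm), sorted by force.
# Placeholder values for illustration only.
CALIBRATION = [(0.0, 0.0), (2.0, 8.0), (5.0, 15.0), (10.0, 20.0)]


def displayed_indentation_mm(force_n: float) -> float:
    """Indentation to show on screen for the force applied to the consumer's cloth."""
    forces = [f for f, _ in CALIBRATION]
    # Clamp to the recorded range rather than extrapolating.
    if force_n <= forces[0]:
        return CALIBRATION[0][1]
    if force_n >= forces[-1]:
        return CALIBRATION[-1][1]
    # Find the two recorded points that bracket the applied force.
    i = bisect_left(forces, force_n)
    (f0, d0), (f1, d1) = CALIBRATION[i - 1], CALIBRATION[i]
    t = (force_n - f0) / (f1 - f0)
    return d0 + t * (d1 - d0)


print(displayed_indentation_mm(3.5))  # between the 2 N and 5 N records
```

A softer blanket would simply have a steeper calibration curve; a renderer could then show the recorded image of the blanket closest to the returned indentation.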

Would it be possible to use this kind of cross-modal perception in human augmentation? To find out, I conducted an experiment that applied the rubber hand illusion.

Before discussing the results, I will explain the rubber hand illusion. A rubber hand is placed in front of the subject where they can see it, and their real hand is placed beside it, with a partition between the two so that the subject can see the rubber hand but not their own. The researcher then uses paintbrushes to stroke the same spot on the rubber hand and on the subject’s hand at the same time. Receiving these synchronized visual and tactile stimuli, the subject gradually comes under the illusion that the rubber hand is their own. At this point, if someone stabbed the rubber hand with a knife, the subject would react as if in pain.

With the aid of VR, we built an application that simulates playing the piano with virtual hands that move in sync with the user’s real hands but differ in shape from ordinary hands. Using this system, which we named “Metamorphosis Hand,” our team investigated how we accept augmentations of the human body (Figure 4). We confirmed that even when the shape and movement of the virtual hands were transformed so that they differed substantially from the real hands, the virtual hands still felt like part of the subject’s own body. Even when the fingers grew to around twice the length of the subject’s actual fingers, subjects soon grew accustomed to them and were able to play the piano. Fingers four times their normal length, however, appear to have been difficult for subjects to accept.

Figure 4. Image of a performance using Metamorphosis Hand. As the user plays the piano, VR shows their fingers steadily growing longer, so that they seem to reach parts of the keyboard that the user cannot actually reach. Because sight and touch are stimulated in sync, the subject still feels that those fingers are part of their own body, even when they look twice their normal length.
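The idea of virtual fingers that stay synchronized with real ones yet have a different shape can be illustrated by rebuilding each tracked finger with longer bones while keeping every joint angle. The Python sketch below is a hypothetical illustration, not the Metamorphosis Hand implementation, which the text does not describe. It assumes hand tracking supplies each finger’s joint positions from knuckle to tip; `Vec3` and `virtual_finger` are names introduced here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other: Vec3) -> Vec3:
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def __sub__(self, other: Vec3) -> Vec3:
        return Vec3(self.x - other.x, self.y - other.y, self.z - other.z)

    def scaled(self, k: float) -> Vec3:
        return Vec3(self.x * k, self.y * k, self.z * k)


def virtual_finger(joints: list[Vec3], length_scale: float) -> list[Vec3]:
    """Rebuild a tracked finger (knuckle first, tip last) with every bone
    lengthened by `length_scale`.

    Each bone keeps its real direction, so the virtual finger bends exactly
    when and how the real one does; only its length differs.
    """
    out = [joints[0]]  # the knuckle stays anchored to the real hand
    for prev, cur in zip(joints, joints[1:]):
        out.append(out[-1] + (cur - prev).scaled(length_scale))
    return out


# Hypothetical tracked joint positions (cm) for one slightly bent finger.
real = [Vec3(0, 0, 0), Vec3(0, 4, 0), Vec3(0, 7, 0), Vec3(0, 9, 1)]
print(virtual_finger(real, 2.0)[-1])  # fingertip of the twice-as-long finger
```

Scaling bones rather than the whole hand keeps the knuckles where the real ones are, which is one plausible way to keep sight and touch aligned at the base of the fingers; the actual system may do something quite different.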

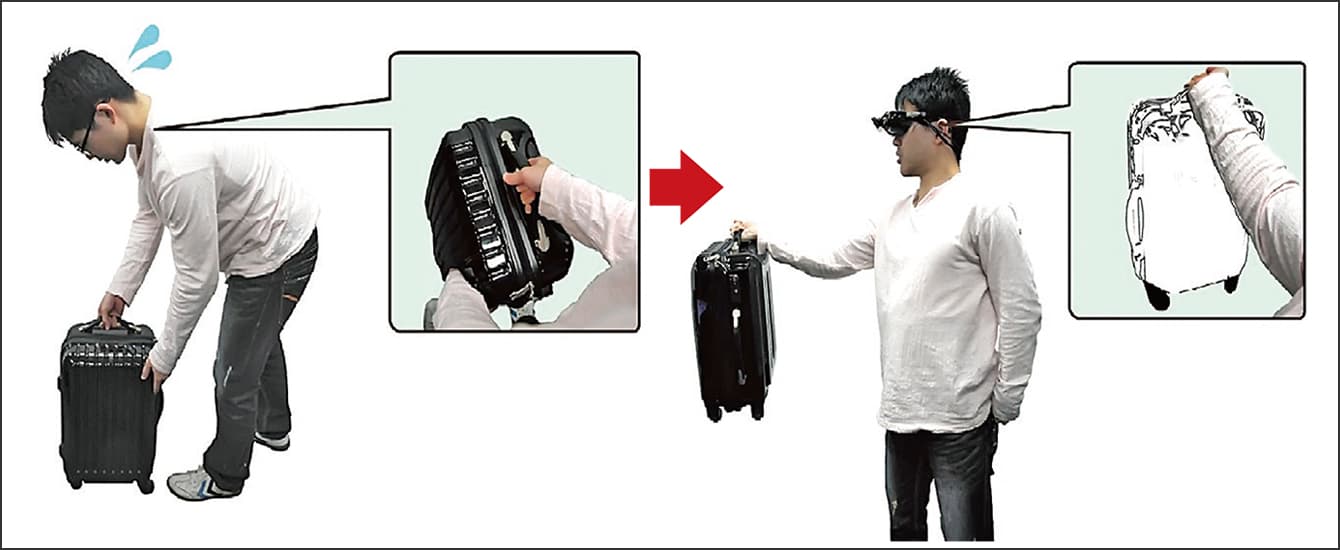

We also developed what we termed “augmented endurance”: a method of increasing people’s endurance to enable them to carry out manual labor more easily.

When people look at a white cardboard box and a black one of the same weight, they perceive the white box as lighter. We do not know whether this is innate, but the brain has the impression that brighter objects are lighter and darker ones are heavier. As a result, people expend excess energy when lifting the black box, thinking, “If I don’t put in this much effort, I won’t be able to lift it,” and they become more fatigued than when lifting the white box. Professional movers may use white cardboard boxes because they know this rule of thumb from experience. By leveraging this illusion and using VR to make carried objects look brighter, we can keep people from expending excess energy and thereby reduce fatigue in manual tasks. In our experiments, this increased task endurance by 18% (Figure 5).

Figure 5. Brighter objects seem lighter in weight. The perceived weight of an object changes when an HMD is used to make the object being lifted look brighter. Simply making the object look white increases task endurance.
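The visual side of augmented endurance comes down to shifting the carried object’s colors toward white in the wearer’s view. The Python sketch below shows that color operation only. It assumes a per-pixel mask marking the object; how the object is tracked and segmented in the HMD is not described in the text, and the linear blend toward white is a choice made here for illustration. `brighten` and `brighten_object` are hypothetical names.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def brighten(color: RGB, amount: float) -> RGB:
    """Blend an 8-bit RGB color toward white.

    amount=0.0 leaves the color unchanged; amount=1.0 turns it pure white.
    """
    if not 0.0 <= amount <= 1.0:
        raise ValueError("amount must be between 0 and 1")
    r, g, b = (round(c + (255 - c) * amount) for c in color)
    return (r, g, b)


def brighten_object(frame: List[List[RGB]], mask: List[List[bool]],
                    amount: float) -> List[List[RGB]]:
    """Brighten only the pixels that the (hypothetical) mask marks as the carried box."""
    return [
        [brighten(px, amount) if inside else px for px, inside in zip(row, mask_row)]
        for row, mask_row in zip(frame, mask)
    ]


# A 1x2 "frame": a dark box pixel next to a background pixel.
frame = [[(40, 30, 20), (90, 90, 90)]]
mask = [[True, False]]
print(brighten_object(frame, mask, 0.8))
```

Leaving the background untouched matters here: the illusion depends on the object itself looking lighter, not on the whole scene getting brighter.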

How do we change mental state and performance?

Using VR technology to give ourselves a new body is also likely to give rise to a new state of mind. Researchers overseas have already reported results from experiments using avatars.

In one experiment, for example, an avatar of Einstein was used to give people the feeling that they were Einstein in a virtual world. Interestingly, the researchers found that subjects achieved higher test scores when using the Einstein avatar than when using an avatar modeled on themselves. When using their own avatar, subjects would think about a problem for a while and then give up if they did not know the answer, because they were constrained by their self-image. With the Einstein avatar, however, they did not give up so easily. They unconsciously made more effort than usual, thinking for longer or trying different approaches. It is conceivable that imagining how Einstein might have approached the problem caused new circuits to form in their brains, leading to their good results.

A VR experiment involving an African drum has also produced highly interesting results. Subjects played the drum while copying a teacher’s avatar, but they were completely unable to drum well when their own avatar wore a formal suit. When their avatar was changed to an African musician with an afro, however, they swung their arms more while drumming. Giving subjects a new body in VR made it easier for them to bring out latent abilities in a form suited to that body.

We took this experiment a step further. Localizing it for Japan with a traditional Japanese taiko drum, we found that, as expected, subjects could not play well when their avatar wore a suit. When we used an avatar wearing a happi coat of the kind seen at Japanese festivals, however, subjects swung their arms faster and drummed well. We then introduced other people (avatars) who played the taiko alongside the subject’s avatar, and found that the way the subject played changed according to the appearance of those around them. Subjects drummed best, and with real gusto, when they wore a happi and those around them wore suits. When the subject wore a suit and those around them wore happi, the subject played quietly. We thus ascertained that what matters is not only the individual’s own appearance but also what could be termed social interaction.

The metaverse, a form of virtual world that has emerged recently, is becoming a space we share with other people. When we use virtual spaces, how are our state of mind and performance altered when we give ourselves new bodies, and when everyone around us has a body that differs from their flesh-and-blood self? That is my current research topic.

Interesting results are emerging from videoconferencing, too, even without going as far as using avatars. We tried a filter that changes the facial expressions of meeting participants. When participants brainstormed with a filter that made everyone appear to be smiling, they generated approximately 1.5 times as many ideas as when no filter was used.

When it comes to augmenting human abilities, the key is not to increase the abilities themselves but to bring out, as efficiently as possible, the abilities people already have in a given situation. With the help of computers, we will support the creation of environments in which people can demonstrate their abilities more readily. I believe that doing so will expand the possibilities for all of us to demonstrate our abilities fully and to live in a manner true to ourselves.

Given recent calls highlighting the importance of diversity and inclusion, I am keen to continue research on VR-based human augmentation that will enable everyone to live more enjoyable lives and play an active role in society while demonstrating their abilities in a manner true to themselves.